Anti-hate action, legislative activity, and, inevitably, FOB updates

The CheckStep Round-Up is a monthly newsletter that gives you fresh insights into content moderation, combating disinformation, fact-checking, and promoting free expression online. The editors of the newsletter are Kyle Dent and Vibha Nayak. Feel free to reach out!

This month we’ve added a new “Expert’s Corner” feature, starting with an interview with our own Kyle Dent, who recently joined CheckStep. He answers questions about AI ethics and some of the challenges of content moderation.

The content moderation news this month includes anti-hate action as well as lots of legislative activity. We’ve got stories about Section 230 of the CDA, Florida lawmakers who want to limit social media platforms’ ability to set their own policies, and European antitrust and AI regulatory enforcement. And what would our new reality be without updates on the Facebook Oversight Board? Don’t worry, we’ve got that too.

Expert’s Corner with Kyle Dent

1. With your extensive work around AI ethics, how would you address the topic of efficiency and AI, especially when we see articles claiming that AI moderation is better than human moderators?

We need to be skeptical of claims that AI performs better than humans. It's been a common boast, especially since the newer bidirectional transformer models have come out, but the headlines leave out a lot of the caveats.

Content moderation, in particular, is very context-dependent, and I don't think anyone would seriously argue that machines are better than humans at understanding the nuances of language. Having said that, AI is a powerful tool that is absolutely required for moderating content at any kind of scale. The trick is combining the individual strengths of human and machine intelligence in a way that maximizes the efficiency of the overall process.

2. What is the most shocking news you’ve come across with respect to hate speech/misinformation/spam? How would you have addressed it?

Actually, I think hate speech and disinformation are themselves shocking, but now that we've moved most of our public discourse online, we've seen just how prevalent intolerance and hatred are. I'd have to say that the Pizzagate incident really woke me up to the extent of disinformation and also to the possibility of real-world harm from online disinformation. And, of course, it's now really obvious how much racial minorities and other marginalized groups, such as LGBTQ people, suffer from hate speech.

The solution requires lots of us to be involved, and it's going to take time, but we need to build up the structures and systems that allow quality information to dominate. There will still be voices that peddle misinformation and hate, but as we make progress hopefully those will retreat back to the fringes and become less effective weapons.

3. How has the dissemination of misinformation changed over time?

Yeah, that's the thing: this is not the first time we as a society have had to deal with a very ugly information space. During the mid- to late 1800s in the United States, there was the rise of yellow journalism, which was characterized by hysterical headlines, fabricated stories, and plenty of mudslinging. The penny papers of that day were only profitable because they reached lots of eyeballs and could therefore sell advertising. All of which sounds a lot like today's big social media companies. Add recommendation algorithms into today's mix and the problem becomes that much worse. We got out of that cycle only because people lost their taste for extreme sensationalism, and journalists began to see themselves as stewards of objective and accurate information with an important role in democracy. It's still not clear how we can make a similar transition today, but lots of us are working on it.

4. Where do you stand with respect to the repeal of Section 230?

As a matter of fact, I just read an article in Wired Magazine that has me rethinking Section 230. I still believe it wasn't crazy at the time to treat online platforms as simple conduits for speech, but Gilad Edelman makes a very compelling argument that liability protection never had to be all or nothing. The U.S. courts are actually set up to make case-by-case decisions that, over time, form policy through the resulting body of common law, which would have given us a much more nuanced treatment of platforms' legal liability. Edelman also argues, and I agree, that it would be a mistake to completely repeal Section 230 at this point. We can't go back to 1996, when the case law could have developed in parallel with our evolving use of social media. Section 230 definitely needs adjusting, because as things stand, it's too much of a shield for platforms that benefit from purposely damaging content like sexual privacy invasion and defamation. The key to any changes, though, is that they don't overly burden small companies and give even more advantage to the big tech platforms that have the resources to navigate a new legal landscape.

5. Why the move from a tenured corporate career to a small startup?

You sound like my mother. (Just kidding, she's actually very happy for me.) I'm mainly really excited to be focused on the problem of disinformation and dealing with toxic content online. I think we're doing great things at CheckStep, and I'm very happy to be contributing in some way to developing the quality information space the world needs so badly.

If you would like to catch up on other thought leadership pieces by Kyle, click here.

CheckStep News

📣 CheckStep is excited to announce that it’s one of the 10 high-potential ventures selected to join Cohort 4 of the OXFO Elevate accelerator.

📣 CheckStep’s Co-Lead of Research Isabelle Augenstein and QCRI’s Preslav Nakov are among the guest editors of a special issue of the Cambridge University Press journal Natural Language Engineering on hate speech detection on social media platforms. The deadline for all submissions is 31 August 2021.

Moderating the Marketplace of Ideas

⚽ 🥅 🏉 Social media boycott: Football clubs, players & sporting bodies begin protest

A large and growing coalition of athletes and sporting bodies banded together for a four-day boycott of social media, calling on platforms to step up their efforts to block racist and sexist abuse against players. Separately, the PFA Charity and Kick It Out released their report analyzing targeted abuse. It’s not pretty.

💵 Florida plans to fine social media for banning politicians

Meanwhile, legislators in Florida want to keep the internet safe for bullies and hate speech. They have already approved a new law that would fine social media companies for banning politicians from their platforms. It just needs the governor’s signature before the courts get to strike it down.

😨 Facebook moderator: ‘Every day was a nightmare’

Isabella Plunkett, a Facebook moderator, testified for the first time about the horrors the platform’s moderators are exposed to. Her testimony described having to sign a PTSD disclaimer and being offered a mere 1.5 hours with a “wellness coach” to help with the anxiety caused by watching graphic content every day.

👨‍💻 Machine-learning project takes aim at disinformation

A must-read interview with Preslav Nakov about tackling disinformation. He talks about his project Tanbih, whose main purpose is to fact-check news before it reaches the public.

📵 Social app Parler is cracking down on hate speech — but only on iPhones

Parler has been allowed back on the App Store, but only because it promised to be nicer. Apple customers looking for incitement and hate speech will have to find it elsewhere.

Disinformation for fun and profit! A new Graphika report details an extensive and highly coordinated disinformation network apparently controlled by Chinese businessman Guo Wengui. The network both spreads ideologically motivated misinformation and promotes Guo’s business interests.

✍️ 🤦 The world regulates Big Tech while U.S. dithers

Legislators in the U.S. can’t even get consensus on who’s allowed to vote, so yeah, the E.U. is taking the lead on big tech antitrust investigations, content moderation regulations, and AI governance.

🤷 Everything You’ve Heard About Section 230 Is Wrong

In-depth explanation from Wired about Section 230. Love it or hate it, you probably don’t really understand it (until you read this).

🧑‍⚖️ Facebook, YouTube, Twitter execs testify in Senate on algorithms

Lawmakers can’t seem to reach a consensus about the best course of action for resolving growing concerns about the amplification of harmful content on platforms. Executives from Facebook, YouTube, and Twitter testified before a Senate Judiciary subcommittee about the role of algorithms in content distribution.

🏢 TikTok to open a 'Transparency' Center in Europe to take content and security questions

In an attempt to increase “transparency” about its content moderation process, the platform is to open a second transparency center in Europe, where experts and policymakers can get a better understanding of its trust and safety systems.

🛒 Amazon's algorithms promote extremist misinformation, report says

Amazon doesn’t quite agree, but the Institute for Strategic Dialogue recently reported that Amazon’s marketplace was filled with “white-supremacist literature, anti-vaccine tomes and books hawking the QAnon conspiracy theory”. Moreover, the platform’s recommendation algorithms often promoted such extremist content.

🙅 Oversight Board overturns Facebook’s decision to remove a post related to ‘genocide of Sikhs’

Facebook’s “Supreme Court,” aka the Oversight Board, overturned another one of Facebook’s decisions and asked for better representation of religious minorities. In other FOB news, Kate Klonick, a law professor and Facebook content moderation expert, discusses the much-ballyhooed decision on the Trump ban. The upshot? The saga continues, but as always, she’s got great insights.

Remedying COVID-19 and Vaccine Misinformation

🎙️ Spotify’s Joe Rogan encourages "healthy" 21-year-olds not to get a coronavirus vaccine

With public health advice from Joe Rogan, you get less than you paid for. According to Media Matters, the highly influential podcaster is offering bad information to young adults.

📈 🖥️ India's Epidemic of False COVID-19 Information

The coronavirus ‘infodemic’ is only picking up steam as India continues to struggle through its second wave.

Regulatory News and Updates

🇺🇸 Opinion | Congress must decide: Will it protect social media profits, or democracy?

Two U.S. Representatives make the case for their Protecting Americans from Dangerous Algorithms Act, which would modify Section 230 of the CDA. They argue that Facebook et al. already know how to stop amplifying terrorist and extremist content. They just need incentives that are better aligned with what’s good for the world.

🇬🇧 Online safety bill: a messy new minefield in the culture wars

The recently proposed Online Safety Bill is a landmark piece of legislation introduced by the British government to tackle online harm. However, some argue that the bill promotes only government-dictated moderation, making the job of moderators even more strenuous. This was not the only criticism: Martin Lewis, who is regularly impersonated in online scams, wants to know how you can have a safety bill that doesn’t cover fraud. He, along with 15 other organisations, called on lawmakers to include protection from the avalanche of online scams in the bill, which is now making its way to the committee stage.

🇪🇺 New rules adopted for quick and smooth removal of terrorist content online | News

The EU recently approved a law that requires online platforms to take down terrorist content within one hour of being notified. In the same week, the European Parliament entered into an agreement with Facebook and Microsoft to use their technology to screen for and remove possible child sexual abuse material (CSAM) online.

CheckStep Articles

📖 👓 How To Embed Trust and Safety In Your Company’s Operations

Digital trust and safety can be more than company values or product features; it can be a holistic business process. In this article, we explore insights from the content moderation industry and scholars such as Daphne Keller.

📖 👓 The Promise of AI Content Filtering Systems For Digital Marketplaces

Learn how AI-powered content moderation systems can help with content filtering on digital marketplaces.

An opinion piece by Kyle Dent discussing the effectiveness of Facebook’s Oversight Board.

📖 👓 A Read Into the New Data Reporting Requirements in the Digital Services Act

What are the business implications of the EU's Digital Services Act? This article explains how your company can better prepare before the DSA takes effect.

Reading Corner

🔎 Multi-Hop Fact Checking of Political Claims

Keep an eye out for this paper at IJCAI-21. It offers insights into the multi-hop reasoning capabilities of fact-checking models. To this end, the authors introduce a dataset annotated with chains of connected pieces of evidence.

🔎 A Large-Scale Semi-Supervised Dataset for Offensive Language Identification

This paper, due to appear at ACL-IJCNLP 2021, discusses the effectiveness of the newly created SOLID dataset, which contains over nine million labelled English tweets, and how it can help detect offensive language online when used alongside the older OLID dataset, whose applicability is limited.

Tweets worth a second look

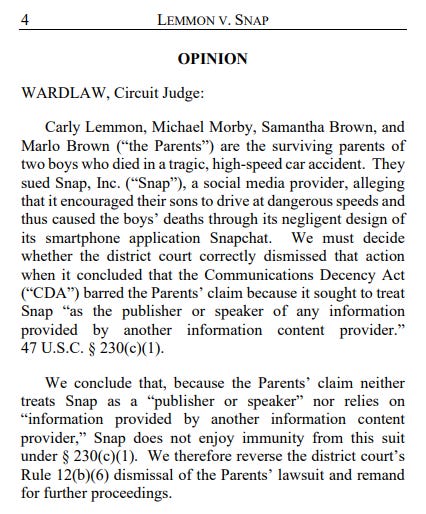

This seems like a pretty big Section 230 decision against Snap: cdn.ca9.uscourts.gov/datastore/opin…

To know more about CheckStep, please click here.